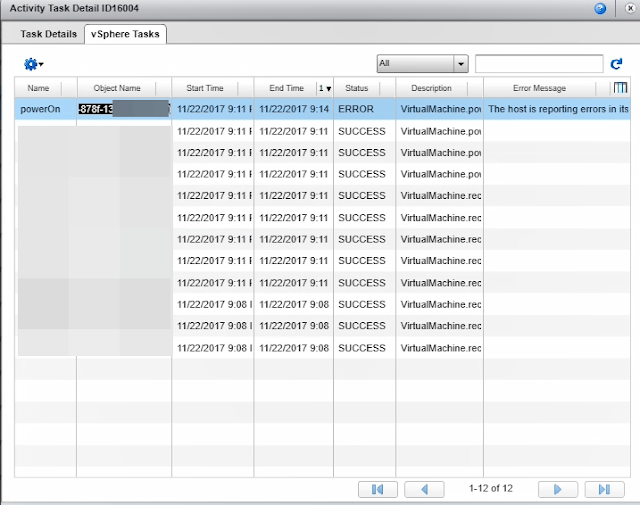

When I tried to power on a vApp with 12 VMs in vCloud Director, the power-on operation failed because one of the VMs could not be powered on its ESXi host. The error was "The host is reporting errors in its attempts to provide vSphere HA support".

++++++++++++++++

Underlying system error: com.vmware.vim.binding.vim.fault.HAErrorsAtDest

vCenter Server task (moref: task-689) failed in vCenter Server 'TEST-VC1' (73dc8fb7-28d6-41b3-86dd-09126c88aebe).

- The host is reporting errors in its attempts to provide vSphere HA support.

+++++++++++++++

I searched for the fault name vim.fault.HAErrorsAtDest and found its description in the vSphere 6.5 API reference on http://pubs.vmware.com/:

http://pubs.vmware.com/vsphere-6-5/index.jsp?topic=/com.vmware.wssdk.apiref.doc/vim.fault.HAErrorsAtDest.html

Fault Description

The destination compute resource is HA-enabled, and HA is not running properly. This will cause the following problems:

1) The VM will not have HA protection.

2) If this is an intracluster VMotion, HA will not be properly informed that the migration completed.

This can have serious consequences for the functioning of HA.
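The vCD error embeds the vSphere fault as a Java binding class name (com.vmware.vim.binding.vim.fault.HAErrorsAtDest), while the API reference page linked above is keyed on the short name vim.fault.HAErrorsAtDest. As a minimal Python sketch, the fault name and the vCenter task moref can be pulled out of the error text and turned into a reference URL. This assumes that other fault pages follow the same URL pattern as the vSphere 6.5 link above (they may not for other releases), and parse_vcd_error is a hypothetical helper, not part of any VMware tool:

```python
import re

# URL prefix taken from the vSphere 6.5 API reference link in this post.
# Assumption: other fault pages follow the same "<fault name>.html" pattern.
API_REF_BASE = ("http://pubs.vmware.com/vsphere-6-5/index.jsp?topic="
                "/com.vmware.wssdk.apiref.doc/")

# vCD reports the fault as a Java binding class; capture the vim.fault.* part.
FAULT_RE = re.compile(r"com\.vmware\.vim\.binding\.(vim\.fault\.\w+)")
# vCD also names the failed vCenter task by its managed object reference.
TASK_RE = re.compile(r"moref:\s*(task-\d+)")


def parse_vcd_error(text):
    """Return (fault_name, task_moref, api_ref_url); missing parts are None."""
    fault = FAULT_RE.search(text)
    task = TASK_RE.search(text)
    fault_name = fault.group(1) if fault else None
    task_moref = task.group(1) if task else None
    url = API_REF_BASE + fault_name + ".html" if fault_name else None
    return fault_name, task_moref, url


if __name__ == "__main__":
    error = ("Underlying system error: com.vmware.vim.binding.vim.fault.HAErrorsAtDest\n"
             "vCenter Server task (moref: task-689) failed in vCenter Server 'TEST-VC1'")
    for value in parse_vcd_error(error):
        print(value)
```

With the error from this post, the generated URL is the same vSphere 6.5 reference page quoted above, and task-689 is the task to look up in vCenter.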

The error indicates that something had gone wrong with vSphere HA, so I checked the ESXi host on which the VM was trying to power on to find out why the operation failed.


The Tasks and Events of host TEST-ESX50 showed that this host was not communicating properly with the HA master node:

++++++++++++++++++++++++++++++++++++++++++++++++++++++

11/22/2017 21:12 | The vSphere HA availability state of this host has changed to Unreachable

11/22/2017 21:12 | vSphere HA agent on host TEST-ESX50 connected to the vSphere HA master on host TEST-ESX55

11/22/2017 21:12 | The vSphere HA availability state of this host has changed to Slave

11/22/2017 21:12 | vSphere HA agent is healthy

11/22/2017 21:12 | Successfully restored access to volume 5540a613-1683deb8-5622-0025b552073e (TEST-Datastore2) following connectivity issues.

11/22/2017 21:12 | Lost access to volume 56d03783-18ad0808-f7bc-0025b552073e (TEST-Datastore1) due to connectivity issues. Recovery attempt is in progress and outcome will be reported shortly.

11/22/2017 21:13 | The vSphere HA availability state of this host has changed to Unreachable

11/22/2017 21:13 | vSphere HA agent on host TEST-ESX50 connected to the vSphere HA master on host TEST-ESX55

11/22/2017 21:13 | The vSphere HA availability state of this host has changed to Slave

11/22/2017 21:13 | vSphere HA agent is healthy

11/22/2017 21:14 | The vSphere HA availability state of this host has changed to Unreachable

11/22/2017 21:14 | Successfully restored access to volume 56d03785-18ad0808-f7bc-0025b552073e (TEST-Datastore1) following connectivity issues.

11/22/2017 21:14 | Lost access to volume 56d037d2-b860fed8-5593-0025b559073e (TEST-Datastore3) due to connectivity issues. Recovery attempt is in progress and outcome will be reported shortly.

11/22/2017 21:14 | Successfully restored access to volume 56d037d2-b860fed8-5593-0025b559073e (TEST-Datastore3) following connectivity issues.

11/22/2017 21:14 | Lost access to volume 546b6e62-30bd5d48-be59-0025c552073e (TEST-Datastore5) due to connectivity issues. Recovery attempt is in progress and outcome will be reported shortly.

11/22/2017 21:14 | DRS migrated TEST-VM1 (58c44bb8-bb81-4207-9ffa-b25de465b79c) from TEST-ESX46

11/22/2017 21:14 | Successfully restored access to volume 546b6e62-30bd5d48-be59-0025c552073e (TEST-Datastore5) following connectivity issues.

11/22/2017 21:14 | Lost access to volume 546b6ea1-cdbde74a-8cf1-0025b552073e (TEST-Datastore10) due to connectivity issues. Recovery attempt is in progress and outcome will be reported shortly.

11/22/2017 21:14 | vSphere HA agent on host TEST-ESX50 connected to the vSphere HA master on host TEST-ESX55

11/22/2017 21:14 | The vSphere HA availability state of this host has changed to Slave

11/22/2017 21:14 | vSphere HA agent is healthy

++++++++++++++++++++++++++++++++++++++++++++++++
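Over a few minutes of events this flapping is easy to spot by eye, but over a longer export it helps to tally it. A minimal Python sketch, assuming the events were copied out as plain text in the "MM/DD/YYYY HH:MM | message" form shown above (summarize is a hypothetical helper, not a VMware tool):

```python
import re
from collections import Counter
from datetime import datetime

# Matches exported event lines such as
# "11/22/2017 21:12 | The vSphere HA availability state of this host has changed to Slave"
EVENT_RE = re.compile(r"^(\d{2}/\d{2}/\d{4} \d{2}:\d{2}) \| (.+)$")
STATE_RE = re.compile(r"availability state of this host has changed to (\w+)")


def summarize(lines):
    """Return HA state changes plus per-minute counts of Unreachable and lost-access events."""
    states = []                 # (minute, new HA state) in log order
    unreachable = Counter()     # minute -> number of transitions to Unreachable
    lost_access = Counter()     # minute -> number of "Lost access to volume" events
    for line in lines:
        m = EVENT_RE.match(line.strip())
        if not m:
            continue  # skip "+++" separators and blank lines
        minute = datetime.strptime(m.group(1), "%m/%d/%Y %H:%M")
        msg = m.group(2)
        state = STATE_RE.search(msg)
        if state:
            states.append((minute, state.group(1)))
            if state.group(1) == "Unreachable":
                unreachable[minute] += 1
        if msg.startswith("Lost access to volume"):
            lost_access[minute] += 1
    return states, unreachable, lost_access


if __name__ == "__main__":
    events = [
        "11/22/2017 21:12 | The vSphere HA availability state of this host has changed to Unreachable",
        "11/22/2017 21:12 | The vSphere HA availability state of this host has changed to Slave",
        "11/22/2017 21:12 | Lost access to volume 56d03783-18ad0808-f7bc-0025b552073e (TEST-Datastore1) due to connectivity issues.",
    ]
    states, unreachable, lost = summarize(events)
    print("HA state changes:", [s for _, s in states])
    print("Unreachable transitions:", sum(unreachable.values()))
    print("Lost-access events:", sum(lost.values()))
```

Applied to the full event list above, it should report three transitions to Unreachable (at 21:12, 21:13 and 21:14) and four "Lost access to volume" events across several datastores in the same window.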

From the fdm.log of ESXi host TEST-ESX50 (slave):

++++++++++++++++++++++++++++++++++++++++++++++++

2017-11-22T21:13:34.526Z TEST-ESX50 Fdm: error fdm[3E75B70] [Originator@6876 sub=Cluster opID=SWI-60b7acd9] [ClusterPersistence::VersionChange] Fetch timeout of 2 seconds from host host-44,192.168.1.100 for version [1] 16698

2017-11-22T21:13:34.526Z TEST-ESX50 Fdm: verbose fdm[3E75B70] [Originator@6876 sub=Cluster opID=SWI-60b7acd9] [ClusterManagerImpl::AddBadIP] IP 192.168.1.100 marked bad for reason Unreachable IP

2017-11-22T21:13:34.526Z TEST-ESX50 Fdm: info fdm[3E75B70] [Originator@6876 sub=Cluster opID=SWI-60b7acd9] [ClusterPersistence::VersionChange] fetching version[1] 16698 from host-44,192.168.1.100

2017-11-22T21:13:34.528Z TEST-ESX50 Fdm: info fdm[3E34B70] [Originator@6876 sub=Cluster] [ClusterManagerImpl::NewClusterConfig] version 16698

2017-11-22T21:13:34.529Z TEST-ESX50 Fdm: verbose fdm[3E34B70] [Originator@6876 sub=Cluster] [ClusterManagerImpl::Uncompress] Uncompressed from size 8135 to size 116147

2017-11-22T21:13:34.535Z TEST-ESX50 Fdm: verbose fdm[3E34B70] [Originator@6876 sub=Cluster] [ClusterManagerImpl::UpdatePersistentObject] name clusterconfig version (16698 ?> 16697) force false

2017-11-22T21:13:34.536Z TEST-ESX50 Fdm: verbose fdm[3E34B70] [Originator@6876 sub=Invt] [InventoryManagerImpl::Handle(ClusterConfigNotification)] Processing cluster config

2017-11-22T21:13:34.536Z TEST-ESX50 Fdm: verbose fdm[3E34B70] [Originator@6876 sub=Invt] [InventoryManagerImpl::UpdateAgentVms] Number of required agents vms changed to 0.

2017-11-22T21:13:34.536Z TEST-ESX50 Fdm: verbose fdm[3E34B70] [Originator@6876 sub=Simulator] [Processing cluster config

2017-11-22T21:13:34.536Z TEST-ESX50 Fdm: verbose fdm[3E34B70] [Originator@6876 sub=Simulator] numPowerOpsPerMinute=0, numResOpsPerMinute=0, sendInterval=0

2017-11-22T21:13:34.536Z TEST-ESX50 Fdm: verbose fdm[3E34B70] [Originator@6876 sub=Simulator] waitTime=60000, numPowerOps=0, numResOps=0

2017-11-22T21:13:34.536Z TEST-ESX50 Fdm: verbose fdm[3E34B70] [Originator@6876 sub=Cluster] Processing cluster config

2017-11-22T21:13:34.536Z TEST-ESX50 Fdm: info fdm[3FBAB70] [Originator@6876 sub=Cluster opID=SWI-1b53be18] [ClusterManagerImpl::StoreDone] Wrote cluster-config version 16698

2017-11-22T21:13:43.540Z TEST-ESX50 Fdm: verbose fdm[3E75B70] [Originator@6876 sub=Election opID=SWI-60b7acd9] CheckVersion: Version[2] Other host GT : 821635 > 821634

2017-11-22T21:13:43.540Z TEST-ESX50 Fdm: verbose fdm[3E75B70] [Originator@6876 sub=Cluster opID=SWI-60b7acd9] [ClusterPersistence::VersionChange] version[2] 821635 from host-44,192.168.1.100

2017-11-22T21:13:43.540Z TEST-ESX50 Fdm: info fdm[3E75B70] [Originator@6876 sub=Cluster opID=SWI-60b7acd9] [ClusterPersistence::VersionChange] fetching version[2] 821635 from host-44,192.168.1.100

2017-11-22T21:13:44.541Z TEST-ESX50 Fdm: info fdm[3E75B70] [Originator@6876 sub=Election opID=SWI-60b7acd9] Slave timed out

2017-11-22T21:13:44.541Z TEST-ESX50 Fdm: info fdm[3E75B70] [Originator@6876 sub=Election opID=SWI-60b7acd9] [ClusterElection::ChangeState] Slave => Startup : Lost master

2017-11-22T21:13:44.541Z TEST-ESX50 Fdm: info fdm[3E75B70] [Originator@6876 sub=Cluster opID=SWI-60b7acd9] Change state to Startup:0

+++++++++++++++++++++++++++++++++++++++++++++++
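The important lines in the slave's fdm.log are buried among verbose cluster-config messages. A minimal Python sketch for pulling them out, assuming the line layout shown above (timestamp, host, "Fdm:", level, thread, bracketed originator tag, message); the regular expressions and the ha_findings helper are my own and may need adjusting for other ESXi builds:

```python
import re
from datetime import datetime

# "<timestamp> <host> Fdm: <level> fdm[<thread>] [<originator tag>] <message>"
FDM_RE = re.compile(
    r"^(?P<ts>\S+) (?P<host>\S+) Fdm: (?P<level>\w+) fdm\[\w+\] "
    r"\[[^\]]*\] (?P<msg>.*)$")
# e.g. "[ClusterElection::ChangeState] Slave => Startup : Lost master"
STATE_RE = re.compile(r"ChangeState\] (\w+) => (\w+) : (.+)$")
# e.g. "[ClusterManagerImpl::AddBadIP] IP 192.168.1.100 marked bad for reason Unreachable IP"
BAD_IP_RE = re.compile(r"IP (\S+) marked bad for reason (.+)$")


def parse_fdm(lines):
    """Yield (timestamp, level, message) for each recognizable fdm.log line."""
    for line in lines:
        m = FDM_RE.match(line.strip())
        if m:
            ts = datetime.strptime(m.group("ts"), "%Y-%m-%dT%H:%M:%S.%fZ")
            yield ts, m.group("level"), m.group("msg")


def ha_findings(lines):
    """Collect error lines, election state changes and IPs the agent marked bad."""
    findings = {"errors": [], "state_changes": [], "bad_ips": []}
    for ts, level, msg in parse_fdm(lines):
        if level == "error":
            findings["errors"].append((ts, msg))
        s = STATE_RE.search(msg)
        if s:
            findings["state_changes"].append((ts, s.group(1), s.group(2), s.group(3)))
        b = BAD_IP_RE.search(msg)
        if b:
            findings["bad_ips"].append((ts, b.group(1), b.group(2)))
    return findings
```

Passing it the lines of a copied fdm.log (for example `ha_findings(open(path))`) on the excerpt above would surface the fetch-timeout error, 192.168.1.100 being marked bad as an "Unreachable IP", and the Slave => Startup transition with reason "Lost master" at 21:13:44.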

From the fdm.log of ESXi host TEST-ESX45 (master):

++++++++++++++++++++++++++++++++++++++++++++++++

2017-11-22T21:12:07.619Z TEST-ESX45 Fdm: verbose fdm[43EAB70] [Originator@6876 sub=Cluster opID=SWI-3ab50c2a] Starting datastore heartbeat checking for slave host-32

2017-11-22T21:12:08.620Z TEST-ESX45 Fdm: verbose fdm[43EAB70] [Originator@6876 sub=Cluster opID=SWI-3ab50c2a] Heartbeat still pending for slave @ host-32

2017-11-22T21:12:09.622Z TEST-ESX45 Fdm: verbose fdm[43EAB70] [Originator@6876 sub=Cluster opID=SWI-3ab50c2a] Heartbeat still pending for slave @ host-32

2017-11-22T21:12:10.625Z TEST-ESX45 Fdm: verbose fdm[43EAB70] [Originator@6876 sub=Cluster opID=SWI-3ab50c2a] Heartbeat still pending for slave @ host-32

2017-11-22T21:12:11.627Z TEST-ESX45 Fdm: verbose fdm[43EAB70] [Originator@6876 sub=Cluster opID=SWI-3ab50c2a] Heartbeat still pending for slave @ host-32

2017-11-22T21:12:12.630Z TEST-ESX45 Fdm: verbose fdm[43EAB70] [Originator@6876 sub=Cluster opID=SWI-3ab50c2a] Heartbeat still pending for slave @ host-32

2017-11-22T21:12:13.631Z TEST-ESX45 Fdm: verbose fdm[43EAB70] [Originator@6876 sub=Cluster opID=SWI-3ab50c2a] Heartbeat still pending for slave @ host-32

2017-11-22T21:12:14.632Z TEST-ESX45 Fdm: error fdm[43EAB70] [Originator@6876 sub=Cluster opID=SWI-3ab50c2a] Timeout for slave @ host-32

2017-11-22T21:12:14.632Z TEST-ESX45 Fdm: verbose fdm[43EAB70] [Originator@6876 sub=Cluster opID=SWI-3ab50c2a] Marking slave host-32 as unreachable

2017-11-22T21:12:14.632Z TEST-ESX45 Fdm: verbose fdm[43EAB70] [Originator@6876 sub=Cluster opID=SWI-3ab50c2a] Beginning ICMP pings every 1000000 microseconds to host-32

2017-11-22T21:12:14.635Z TEST-ESX45 Fdm: info fdm[483AB70] [Originator@6876 sub=Invt opID=SWI-33316784] [HostStateChange::SaveToInventory] host host-32 changed state: FDMUnreachable

2017-11-22T21:12:14.635Z TEST-ESX45 Fdm: verbose fdm[483AB70] [Originator@6876 sub=Vmcp opID=SWI-33316784] Canceling VM reservation for non-live host host-32

2017-11-22T21:12:14.635Z TEST-ESX45 Fdm: verbose fdm[483AB70] [Originator@6876 sub=PropertyProvider opID=SWI-33316784] RecordOp ASSIGN: slave["host-32"], fdmService. Applied change to temp map.

2017-11-22T21:12:15.448Z TEST-ESX45 Fdm: verbose fdm[48BCB70] [Originator@6876 sub=Cluster] FDM_SM_SLAVE_MSG with id host-32 (192.168.1.36)

++++++++++++++++++++++++++++++++++++++++++++++++
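In the master's log, datastore heartbeat checking for host-32 starts at 21:12:07.619, six "Heartbeat still pending" messages follow at roughly one-second intervals, and the timeout is declared at 21:12:14.632, about 7 seconds later. A hedged Python sketch that extracts this timeline per slave, assuming the same fdm.log layout as above (heartbeat_timelines is a hypothetical helper; it only measures what the log records and says nothing about how the FDM timeouts are configured):

```python
import re
from datetime import datetime

# Generic fdm.log layout: "<timestamp> <host> Fdm: <level> fdm[<thread>] [<tag>] <message>"
LINE_RE = re.compile(r"^(\S+) \S+ Fdm: \w+ fdm\[\w+\] \[[^\]]*\] (.*)$")
START_RE = re.compile(r"Starting datastore heartbeat checking for slave (\S+)")
PENDING_RE = re.compile(r"Heartbeat still pending for slave @ (\S+)")
TIMEOUT_RE = re.compile(r"Timeout for slave @ (\S+)")


def heartbeat_timelines(lines):
    """Map slave id -> (check started, pending count, timeout time, seconds elapsed)."""
    started, pending, timed_out = {}, {}, {}
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # skip separators and unrecognized lines
        ts = datetime.strptime(m.group(1), "%Y-%m-%dT%H:%M:%S.%fZ")
        msg = m.group(2)
        s = START_RE.search(msg)
        if s:
            started.setdefault(s.group(1), ts)  # keep the first check per slave
        p = PENDING_RE.search(msg)
        if p:
            pending[p.group(1)] = pending.get(p.group(1), 0) + 1
        t = TIMEOUT_RE.search(msg)
        if t:
            timed_out.setdefault(t.group(1), ts)  # keep the first timeout per slave
    timelines = {}
    for slave, begun in started.items():
        ended = timed_out.get(slave)
        # None means the log shows no timeout for this slave
        elapsed = (ended - begun).total_seconds() if ended else None
        timelines[slave] = (begun, pending.get(slave, 0), ended, elapsed)
    return timelines
```

Run over the master excerpt above, it should report six pending heartbeats for host-32 and roughly 7.0 seconds between the start of checking and the timeout.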

These logs show that the slave was frequently losing communication with the HA master. The vApp VM from vCloud Director was trying to power on at a time when the master had declared this host unreachable ("Marking slave host-32 as unreachable").

I reconfigured vSphere HA on the cluster to fix the HA issues. After HA was reconfigured, the power-on operation completed successfully.

Read: http://pubs.vmware.com/vsphere-6-5/index.jsp?topic=/com.vmware.wssdk.apiref.doc/vim.fault.HAErrorsAtDest.html

https://docs.vmware.com/en/VMware-vSphere/6.5/vsphere-esxi-vcenter-server-65-troubleshooting-guide.pdf (section: Troubleshooting Availability)
